Artificial intelligence and machine learning have always enjoyed excellent publicity, but since image and text generators such as DALL-E, Midjourney, and ChatGPT entered our lives, there seems to be a near consensus that AI is about to take over.


For many of us, the term “artificial intelligence” (AI) conjures up images from science fiction, in which a humanoid robot displays human-level intelligence (if you were born in the 80s or 90s, imagine it with an Austrian accent amid explosions). This is an example of artificial general intelligence, also known as “strong” AI: a machine that can perform any cognitive task a human can (even though the question “What is intelligence?” remains an open philosophical one that we will not address here). For obvious reasons, the media and the companies that work with this technology tend to make it seem mysterious, but experts in the field have long been saying: “Stop using the term artificial intelligence to describe everything. People talk as though computers can think, but they can't” [1]. Today’s machines are impressive and sophisticated, but they can only perform very specific tasks, and designing and training them to do these tasks takes a great deal of time and effort. But how good are these machines at performing those tasks, and what are their limitations? We will discuss this in more detail in this post.

In the previous chapters of the “Artificial Intelligence” series: “Machine learning” is a general term for algorithms and mathematical analyses that enable a computer to generalize, that is, to make decisions about cases it has not previously encountered and for which it was not explicitly programmed. The process can be compared to a student who learns from examples in class and then uses that knowledge to answer an unfamiliar exam question. Depending on the application, these algorithms may draw on mathematics from fields such as statistics and signal processing. In recent years, with the growth of available data and computing power, algorithms based on artificial neural networks have become increasingly popular.
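To make “generalization” concrete, here is a minimal Python sketch of one of the simplest learning methods, a 1-nearest-neighbor classifier. It is not a method discussed in this series; it was chosen only because it is short. The training examples and their two features are invented for illustration. The program is never given a rule for telling cats from dogs: it labels a new, unseen case by copying the label of the most similar example it has already seen.

```python
import math

# Hypothetical training examples: (body_length_cm, weight_kg) -> label.
# The numbers are invented for illustration only.
training_examples = [
    ((25.0, 4.0), "cat"),
    ((23.0, 3.5), "cat"),
    ((60.0, 30.0), "dog"),
    ((55.0, 25.0), "dog"),
]


def predict(features):
    """Label a new case with the label of its closest training example (1-nearest neighbor)."""
    _, nearest_label = min(
        training_examples,
        key=lambda example: math.dist(example[0], features),
    )
    return nearest_label


# Neither input appears in the training set; no cat/dog rule was written by hand.
print(predict((24.0, 3.8)))   # cat
print(predict((58.0, 28.0)))  # dog
```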

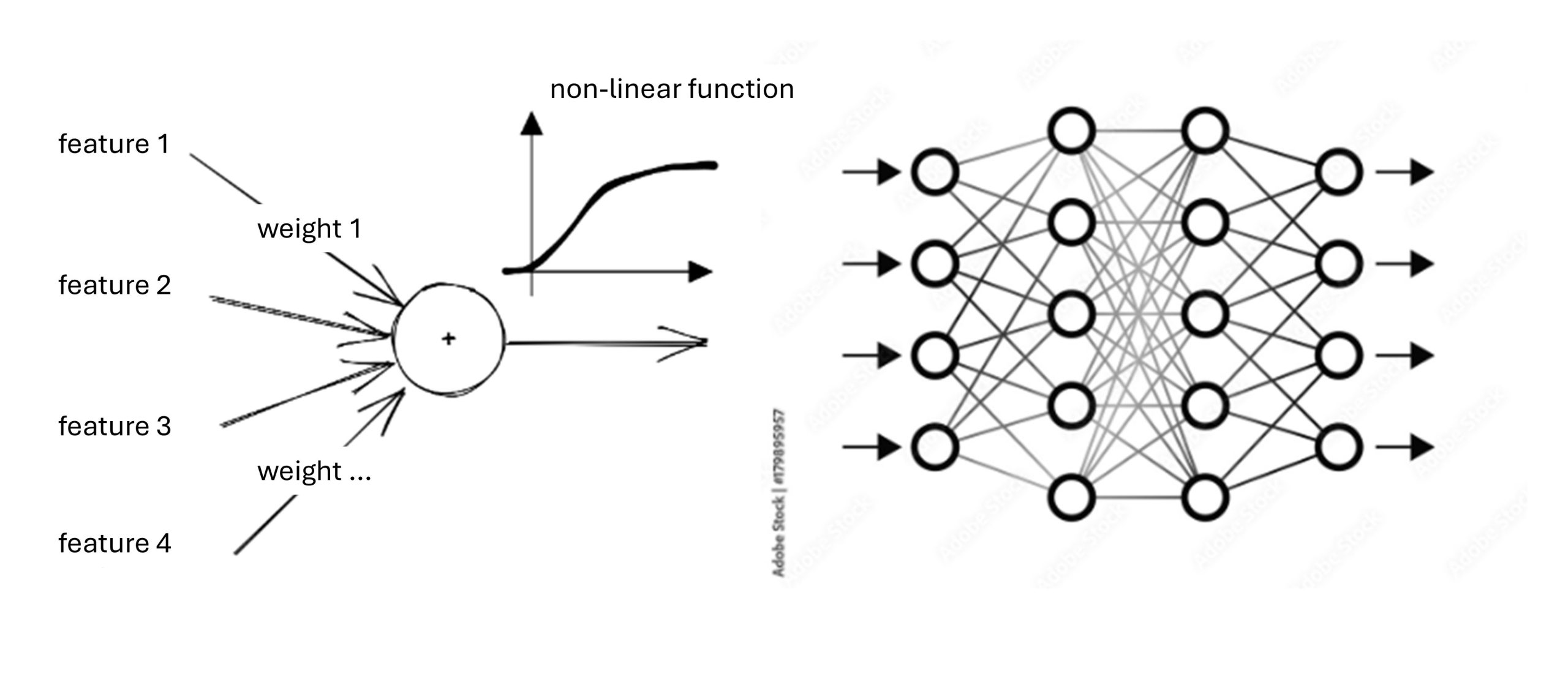

Although the term “neuron” is inspired by the brain, it actually refers to a fairly simple mathematical computation and constitutes the basic building block of all the sophisticated machines that we almost believed would soon destroy humanity. The neuron receives multiple numerical attributes (called features), multiplies each by a weight (thereby prioritizing their influence), and applies a non-linear activation function to the weighted sum (read more on why this is important here [2]). A network is formed by many interconnected neurons organized in layers (see Figure 1). Increasing the “depth” of the network (i.e., adding more layers) yields more complex connections, and this approach is known as deep learning [3].

Figure 1: A single neuron (left) and a neural network with several layers—each column is a layer (right)
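The computation of a neuron and of a layered network fits in a few lines. The following Python sketch assumes a sigmoid as the activation function and adds a bias term (a constant added to the weighted sum, which most implementations include although it is not mentioned above). The weights are arbitrary numbers, not trained values.

```python
import math


def sigmoid(z):
    """A common non-linear activation function: squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(features, weights, bias):
    """Multiply each feature by its weight, sum, add the bias, apply the activation."""
    weighted_sum = sum(x * w for x, w in zip(features, weights)) + bias
    return sigmoid(weighted_sum)


def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all receive the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def network(features, layers):
    """Pass the outputs of each layer on as the inputs of the next layer."""
    activations = features
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations


# Arbitrary (untrained) weights: 2 input features -> 3 neurons -> 1 output neuron.
example_layers = [
    ([[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]], [0.0, 0.1, -0.2]),
    ([[1.0, -2.0, 0.5]], [0.3]),
]
print(network([1.0, 2.0], example_layers))  # a list holding one value between 0 and 1
```

Adding another `(weight_rows, biases)` pair to `example_layers` makes the network one layer deeper; the code that runs it does not change.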

During the training (or learning) phase, the network is exposed to numerous examples and adjusts the weights of each neuron to minimize the discrepancy between the network’s computed output and the correct answer provided by each example (this process is explained in [3]). This process highlights a significant limitation of the algorithm: its effectiveness depends on the quality of the training data and on the examples it has been exposed to. Suppose we train the network to distinguish between images of dogs and cats. If we then input an image of a Ninja Turtle, the network will most likely label it as either a cat or a dog, because those are the only answers it has learned to give. Note that the structure described so far is a fairly basic, widely used network; other structures (called “architectures”) exist for different applications.
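Below is a minimal Python sketch of training a single sigmoid neuron with stochastic gradient descent, one common way to “adjust the weights to minimize the discrepancy.” The loss function (cross-entropy), learning rate, and number of passes are assumptions, and the two features per image are invented. After training, we feed in an input unlike any training example, standing in for the Ninja Turtle: the neuron still has no way to answer anything except “cat” or “dog.”

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical toy data: two invented features per image, scaled to 0..1
# (think "ear pointiness" and "snout length"). Label 1 = cat, 0 = dog.
examples = [
    ((0.9, 0.2), 1), ((0.8, 0.3), 1), ((0.85, 0.1), 1),
    ((0.3, 0.8), 0), ((0.2, 0.9), 0), ((0.4, 0.7), 0),
]


def output(features, weights, bias):
    """Probability that the input is a cat, according to the current weights."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(examples, learning_rate=0.5, epochs=2000):
    """Stochastic gradient descent on the cross-entropy loss of one sigmoid neuron."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, target in examples:
            # Discrepancy between the neuron's output and the example's correct answer.
            error = output(features, weights, bias) - target
            # For sigmoid + cross-entropy, the gradient for each weight is error * input.
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def classify(features, weights, bias):
    p_cat = output(features, weights, bias)
    return ("cat" if p_cat >= 0.5 else "dog"), p_cat


weights, bias = train(examples)
for features, target in examples:
    print(features, classify(features, weights, bias)[0])

# A "Ninja Turtle": an input unlike anything in the training set.
# The only possible answers are still "cat" or "dog".
print(classify((0.05, 0.05), weights, bias))
```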

Let us now focus on a machine that has recently been receiving a great deal of attention: OpenAI’s ChatGPT. It is a type of chatbot that allows free-text “conversations” and typically gives highly human-like responses. Its structure differs considerably from the basic network described above. It is a Large Language Model (LLM) that uses a technique known as self-attention to analyze entire sentences and the relationships between the words in them. This is achieved by encoding the free text into numerical vectors that represent features (for a detailed explanation, see [4]). The network was trained on vast amounts of internet text (mainly information up to 2021) to predict a continuation of the encoded text, which is then decoded, using the same method, back into free text that appears human-generated. This is a critical point: ChatGPT is designed to predict the next word in a text. It was not designed to evaluate the accuracy of its output, nor does it attempt to do so. Therefore, when it is used to answer general questions, the result is usually impressive-sounding text, but the answers can often be uninformative or simply wrong (a phenomenon called hallucination). For example, if you ask it for citations in a specific field, it may present seemingly relevant titles and even familiar author names, but the papers may not exist. You can easily test this yourself by asking about moderately complex topics in which you have expertise; you are likely to find inaccuracies or outright nonsense.
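Two toy Python sketches may help with the ideas in this paragraph; neither is how ChatGPT is actually implemented. The first is scaled dot-product self-attention, the core operation of Transformer-based language models, simplified by skipping the learned query, key, and value projections a real model uses; the word vectors are arbitrary. The second is a greedy next-word predictor built from word-pair (bigram) counts, a far simpler model than an LLM that shares its basic objective: produce a likely continuation, with no step that checks whether the result is true.

```python
import math
from collections import Counter, defaultdict


# --- Part 1: scaled dot-product self-attention (simplified) ---

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Each word's new vector is a weighted average of all word vectors in the sentence.

    Simplification: queries, keys and values are the input vectors themselves;
    a real Transformer first multiplies them by learned matrices.
    """
    d = len(vectors[0])
    outputs = []
    for query in vectors:
        # How strongly this word relates to every word (including itself).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return outputs


# Arbitrary 2-dimensional vectors standing in for three encoded words.
print(self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))


# --- Part 2: greedy next-word prediction from word-pair counts ---

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, length):
    """Repeatedly append the most frequent next word; nothing checks whether it is true."""
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in training
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


counts = train_bigrams("the cat sat on the mat the dog sat on the rug")
print(generate(counts, "the", 5))  # the cat sat on the cat
```

On this tiny corpus, the predictor produces “the cat sat on the cat”: every word pair in it occurred in the training text, yet the sentence as a whole says something the text never said.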

Another serious issue that has come to light is that these machines learn from information available on the internet, which makes them highly susceptible to human biases. As demonstrated in a “KAN” news item [5], when an image generator is asked to produce an image of a doctor, it produces an image of a man, whereas when it is asked for an image of a housekeeper, it produces an image of a woman.
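A toy Python sketch of how skewed training data can turn into skewed output. The “training data” below is invented and deliberately imbalanced (it is not real statistics), and the hypothetical “generator” simply returns the most frequent option for each profession. Real image generators are far more complex and generally sample their output rather than always choosing the single most likely option, but the sketch shows the basic mechanism: the model has no notion of fairness, only of what was common in its data, and in this greedy version a 70/30 split in the data becomes 100/0 in the output.

```python
from collections import Counter, defaultdict

# Hypothetical, deliberately skewed training data: (profession, gender shown in the image).
# The proportions are invented for illustration; they are not real statistics.
training_data = (
    [("doctor", "man")] * 70 + [("doctor", "woman")] * 30
    + [("housekeeper", "woman")] * 80 + [("housekeeper", "man")] * 20
)


def learn(data):
    """Count how often each gender appears with each profession."""
    counts = defaultdict(Counter)
    for profession, gender in data:
        counts[profession][gender] += 1
    return counts


def describe_generated_image(counts, profession):
    """Greedy 'generator': always picks the most frequent option seen in training."""
    gender = counts[profession].most_common(1)[0][0]
    return f"a {gender} working as a {profession}"


counts = learn(training_data)
print(describe_generated_image(counts, "doctor"))       # a man working as a doctor
print(describe_generated_image(counts, "housekeeper"))  # a woman working as a housekeeper
```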

Further refinements have set ChatGPT apart from its previous versions and from similar algorithms, earning it a well-deserved reputation. Notably, the training on internet text is further improved by human feedback [6] (using another machine-learning approach called reinforcement learning, which is a topic for a separate post). For now, it would be premature to panic and look for a new career. Intelligent use of tools such as ChatGPT can be beneficial; however, it is important to keep their limitations in mind and not to rely on them for life-changing decisions.

Hebrew editing: Shir Rosenblum-Man

English editing: Gloria Volohonsky

References:

1. A machine-learning expert opposes the use of the term “artificial intelligence”.
2. On non-linear activation functions.
3. Post on artificial neural networks and deep learning.
4. How a Large Language Model understands relationships within sentences.
5. Examples of bias in image generators.
6. More on what makes ChatGPT unique and learning from human feedback.